Geroline

Student Perception and Attainment in an Online German Language Course

Mathias Schulze, Grit Liebscher, Waterloo, and Su Mei Zhen, Changsha

The Geroline project comprised the development of and research for two elementary and one intermediate German language courses which are delivered online for university-level distance education. The course materials include a commercially produced textbook package and our online materials which constitute a task-based learning environment by providing guidance and structure to the learning process. We discuss relevant aspects of the pedagogic design of our learning environment, its theoretical foundations and aspects of a study of the efficacy of the learning design. Insights into student perception of different course components and aspects were gained through the analysis of qualitative data from questionnaires and interviews. Our analysis of the numeric test data shows that there is no significant difference in attainment between the group who studied online and largely independently and the groups who were taught on campus. Using a task-based language teaching framework for our online courses helped students who chose to study online to achieve similar learning outcomes as their peers in the on-campus groups.

1. Introduction

In Fall 2001, the Department of Germanic and Slavic Studies at the University of Waterloo (Ontario, Canada) started the Geroline project[1], the research for and the development of online elementary German language courses for distance education. The development of three courses (Elementary German I and II (GER 101, GER 102) and Intermediate German I (GER 201)) was completed in December 2003. Currently, we are adapting the three courses to the new and revised edition of Lovik et al. (2007). Early plans for this project are outlined in Liebscher & Schulze (2002). Other results of our data analysis have been documented: an error analysis (Lee, 2003), a comparative analysis of comprehensible input and output in the data (Drashkaba, 2005), an analysis of the task design (Jiang, 2006), as well as a discussion of task-based learning and community of practice (Liebscher & Schulze, 2004). Further computer-aided analyses of the interlanguage texts produced by the two elementary German groups are still under way.

In this paper, we contribute to the current discussion of language teaching online – see e.g. the special issue of the CALICO Journal What does it take to teach online? Towards a Pedagogy for Online Language Teaching and Learning (Stickler & Hauck, 2006) – by presenting relevant aspects of the pedagogic design of the learning environment we created for these courses. Online language learning has been discussed for a wide variety of more advanced learners. For an example of German teaching online see Eigler (2001). Less is known about the effectiveness of task-based learning designs in online courses for early language learners. It has even been argued that online learning is not suitable for elementary language courses. Spodark (2004, p. 86), for example, shows for her French course that the work with authentic French websites would be too demanding for early language learners. However, we can show with our courses, which have been offered in ten consecutive terms since their launch, that the online medium itself is suitable for these learners as long as the instructional design and content is geared to their needs. In the second part of this paper, we discuss findings of our study in which we investigated the efficacy of our online language courses in terms of student attainment and looked at the perception of such a new learning environment by university students.

2. Course Design

Two important guidelines for our online course development were:

· To utilize the teaching materials (Lovik et al., 2002) which had already been adopted for the on-campus sections in distance education teaching. This enables students to switch between on-campus and distance-education sections from one semester to the next. The compatibility of distance education and on-campus courses proves particularly useful to students on co-op programs, who normally alternate academic terms with work terms during which they might take one or two courses via distance education. The use of the same teaching materials is also more efficient for instructors and developers because they rely on the expertise gained in using the textbook materials, albeit with earlier editions, since 1999.

· To create accompanying online learning materials which compensate for aspects of the learning process which are found in classroom teaching, but are difficult or impossible to arrange for a distance education section. We did this to ensure the comparative quality of the learning experience in all sections of one course independent of whether they are taught face-to-face or online.

Our experience was in some aspects similar to that by Strambi and Bouvet (2003), who discuss the pedagogic challenges they encountered when designing elementary language courses for French and Italian, in that we initially relied on the experience of the Department of Distance and Continuing Education of our University. This meant for us working with colleagues who had a wealth of expertise in instructional design, but had little to no awareness of language teaching methodology.

The learning impact study presented here can be viewed as a necessary measure of quality control. We wanted to find out whether students working with these materials and with the task-based, online learning design are afforded the same opportunity to study successfully as their peers in the on-campus sections of the same course. Comparing our current courses to the previous version with print materials and audio cassettes, we note the advantages of the speedier just-in-time delivery of learning objects and assessment items online. We are then asking whether the task-based design of these online courses is suitable for early language learners. To sum up, we believe that there are four features which are specific to our three online language courses:

· Our online materials function more as a complex and detailed study guide – similar to an explicit instructor model – in that our system tells students what to do when and how, but most learning objects are situated outside of the online course in the textbook, workbook, interactive CD and audio CDs.

· Our material covers three consecutive one-semester courses (3 × 12 teaching weeks + examination period). All instructional as well as organisational information is available online. Instructional guidance and linguistic feedback, submission of oral and written task results and grading, student-student and student-instructor interaction – all are done online. The only exception is the final written examination which is taken in one of our examination centres and is done on paper.

· Our courses have been conceived in the task-based language teaching framework which is suitable for course design in technology-rich environments (Skehan, 2003).

· The collaboration of distance education learners in the target language is facilitated through the extensive use of discussion boards for language practice, semi-public writing and peer-feedback. Discussion boards which function as a group portfolio and a frequently-asked-questions discussion thread provide an English-language forum for students to discuss study-related questions.

2.1 Task-Based Language Teaching

“Task-Based Language Teaching … constitutes a coherent, theoretically motivated approach to all six components of the design, implementation, and evaluation of a genuinely task-based teaching program: (a) needs and means analysis, (b) syllabus design, (c) material design, (d) methodology and pedagogy, (e) testing, and (f) evaluation” (Doughty & Long, 2003, p. 50). It was this approach which informed our course design. We agree with Eckerth (2003, 2003) who prefers tasks (Lernaufgaben) which have some relevance for our learners over fictitious tasks (see e.g., Skehan, 1998, p. 143), but we would not go as far as Börner (1999) and describe fill-in-the-blank exercises as tasks. However, we do see a link between language learning tasks and the teaching and learning of structural elements – grammar and vocabulary. We see tasks as “a vital part of language teaching” (Skehan, 1996, p. 39), but not as the sole unit of instruction. In our course design, tasks are preceded by smaller subtasks which in turn are prepared by topics and tutorials (see 2.2). In our understanding of task we follow Willis’ very practical definition: “a goal-oriented communicative activity with a specific outcome, where the emphasis is on exchanging meanings, not producing specific language forms” (1996, p. 36), which appears to be widely accepted (Bygate et al., 2001, p. 11; Ellis, 2003, p. 3; Skehan, 1996, p. 38). As Willis suggests (p. 42), our tasks are preceded by pre-task activities and followed by reflection as a post-task activity (Levy & Kennedy, 2004; Skehan, 1998, p. 149). Our students prepare for each subtask (see 2.2) by working on a number of topics and engage in practical language use in tutorials. The latter are basically a set of recognition, practice and application exercises. We would not deny that the (simplified) sequence topic-tutorial-task is reminiscent of the so-called 3Ps approach to language teaching methodology – present, practice, produce (cf. Skehan, 1998, pp. 94-95).
This way, we are providing a familiar structure to each task for our students – a structure which they could simply follow, but could also move away from if they think this facilitates their learning. The subtasks themselves can also be seen as pre-task activities in that they are facilitating successful completion of the main task. The task results are submitted electronically. Students receive individual feedback on each task submission and are expected to work with the feedback as a post-task activity. We are mainly utilising the web as a delivery medium for instructional messages and as a tool for task completion and submission. Unlike in some other courses (e.g., Ros i Solé & Mardomingo, 2004), in which the web itself forms the backbone of the task-based design, we are using the online technology exclusively to link students with each other, their off-line learning resources, and their instructor(s).

As far as the task design is concerned, it is important to adapt language learning tasks to the online medium or to create new tasks suitable for this medium. Knight (2005) comes to the same conclusion after his study of task design in computer-mediated communication. Our tasks make use of the technology context. For example, students introduce themselves to the group in German at the end of chapter 1, which is necessary because they have not had any prior personal contact at this stage; they leave a message on an answering machine by recording the text on the phone; and they write a short piece about Waterloo for German-speaking visitors which could appear on one of the many web pages about the region.

Egbert and Yang (2004) propose eight conditions for efficient task-based language learning in, what they call, classrooms with limited technology:

1. Learners have opportunities to interact socially and negotiate meaning.

2. Learners interact in the target language with an authentic audience.

3. Learners are involved in authentic tasks.

4. Learners are exposed to and encouraged to produce varied and creative language.

5. Learners have enough time and feedback.

6. Learners are guided to attend mindfully to the learning process.

7. Learners work in an atmosphere with an ideal stress/anxiety level.

8. Learner autonomy is supported. (pp. 284-285)

We believe that these conditions also apply to an online distance education course. The following ambitious principles state that language learning tasks should be:

a. interactive and include reporting back of communicative outcome (Skehan, 2003);

b. collaborative, interesting, rewarding and challenging (Meskill, 1999);

c. meaningful and engaging rather than repetitive or stressful;

d. provide opportunities to produce target language (Chapelle, 1998);

e. make use of authentic materials (Little, 1997);

f. be appropriate to the medium used (Furstenberg, 1997).

(Rosell-Aguilar, 2005, p. 420)

We use the identifiers of the conditions (1.-8.) and principles (a.-f.) from above as cross-references to indicate how we tried to meet them in our course design.

2.2 Overview of course components

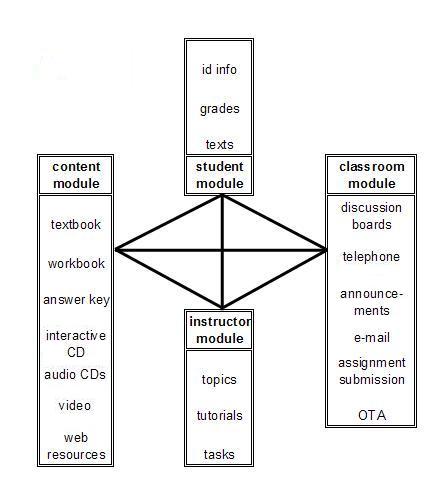

Designing an online course for language learners is an ill-defined task because “identifying a single best-practice pedagogical approach for online learning is impossible” (Felix, 2003, p. 164). We connected our new task-based online environment to an existing textbook package. The relation of different course components is illustrated in Figure 1. The four modules – student, classroom, instructor, and content – are interdependent and interconnected.

The content module. Students who register for one of these courses (GER101, 102, 201) are required to purchase the Vorsprung package (Lovik et al., 2002) which contains the textbook, the Arbeitsbuch consisting of workbook (with writing and grammar exercises), laboratory manual and video workbook (with comprehension exercises), and the Vorsprung Interactive CD-ROM (with grammatical and vocabulary exercises which partially overlap with the workbook), a copy of the answer key for the workbook and the set of ten audio CDs. The video clips can be accessed by all students from our language laboratory servers. Web resources (online exercises and web quests) are made freely available by the publisher. The print materials, the interactive CD as well as the textbook-related website and the departmental website already contain a wealth of written exercises. Therefore, we concentrated on providing learning support and guidance in the form of hypertext documents and channels for student-to-student, student-to-group communication. (conditions 4, 5, 6; principles d, f)

Figure 1: Geroline course material

The classroom module. Students enrol in distance education courses at the University of Waterloo for a variety of reasons: Firstly, many of them are prevented from taking a particular course on campus because they live at some distance from the university, or other commitments (e.g. work, child care) impose severe time constraints. Secondly, the University of Waterloo is the largest provider of co-operative education in Canada, so a number of students enrol for distance education courses during their work terms. Others find the on-campus course and/or section of their choice over-subscribed and register with the distance education section for this reason. And lastly, some students simply prefer to take a course via distance education because it offers them greater independence and flexibility or because they perceive this version of the course to be easier. Through our online environment, we provide all of them with the opportunity to meet other students of their group. Through the learning management suite – UW-ACE (http://uwace.uwaterloo.ca) – students have access to a variety of online discussion boards. It facilitates the implementation of task-based learning objects or entire courses and relies on the ANGEL course management system by Angel Learning (http://www.angellearning.com).

In the two English-language discussion boards, students report their progress at the end of each week and can see how the others are moving forward (My Portfolio), and they have a forum for airing questions about any matter related to the course (Questions-Answers) (conditions 6, 8; principle b). The German discussion boards are used for small writing tasks and exercises (open-ended questions) and essentially as a semi-public writing space. The students of one group (normally between 5 and 15, with a maximum of 20) can see what their peers have written, and they are encouraged to reply to postings by others and also to provide feedback. Of course, students at this level of second-language competence find it extremely difficult to notice errors in somebody else’s writing and then to provide accurate feedback. However, we believe that what matters is not the quality of the feedback but the fact that students thoroughly read and comment upon the submissions of their peers. In most cases, this is going to result in the provision of additional comprehensible input (Krashen, 1982). On the other hand, the multitude of small discussion boards linked to tasks (see below) and exercises offers students an opportunity to produce comprehensible output (Swain, 1985). Due to the semi-public nature of these boards, students are motivated to take more care when producing the foreign-language texts because they know that these texts will be read not just by one person, but by some if not all members of their study group (conditions 5, 6; principles a, b, c, d, e, f). Instructors monitor the discussion boards, but they seldom interfere. At the end of the semester, each student receives a participation mark of at most 10%, which is based solely on their contributions to the discussion boards because these are the only accessible evidence for language learning activity apart from texts submitted as assignments.

The instructors communicate with the students – individually or as a group – usually through announcements posted via the course management tool or via e-mail. All students hand in written assignments via submission textboxes. Feedback, annotations and grades are provided via the course management tool UW-ACE. Oral assignments and one or two oral exercises are submitted using the Oral Task and Assignment Tool (OTA) – a tool which was tested in these courses for the first time. Students use the telephone to record their assignments, they identify themselves and the assignment they wish to submit by pressing numeric IDs on their touch-tone telephone keypad, listen to the oral prompts and instructions and start recording. Their submission is immediately streamed from the OTA server to the course management server so that both student and instructor can listen to the submitted recording. The instructor provides individual as well as group feedback also by using the telephone. Using the telephone as a recording device ensures that all students can do the tasks without any technical difficulty and that the instructor receives audio files for feedback and grading which are all of the same digital format. Of course, this approach is still far off from a mediated yet natural conversation between two students or a student and an instructor. The implementation of multimodal conferencing in online distance education courses is still rare and remains under-researched (Hampel, 2003). Hampel also reports the technical problems they experienced with the sophisticated setup of the Lyceum software at the Open University in Britain. In spite of the fact that we too had to overcome some technical support problems on the server side of the OTA system, we would argue that our approach has also been an effective one due to us creating communicative tasks which are suitable for the medium, e.g. leaving a brief voice mail. 
Efficiency also increased because the time between the submission of an oral task or assignment and the feedback on it was shorter than it had been when cassettes were mailed.

The instructor module. The Vorsprung materials (Lovik et al., 2002) were not written as self-study materials. Hence, when creating our online distance education courses, we had to provide students with guidance as an instructor would do in class. Apart from correction, feedback and encouragement which is given on the basis of the individual assignment submissions or done as peer-feedback, we felt it important to (1) make the structure of the material more transparent, (2) indicate the importance and relevance of certain topics, (3) make possible learning motivation explicit, (4) complement the explanation in the materials with further detail we thought our students might need.

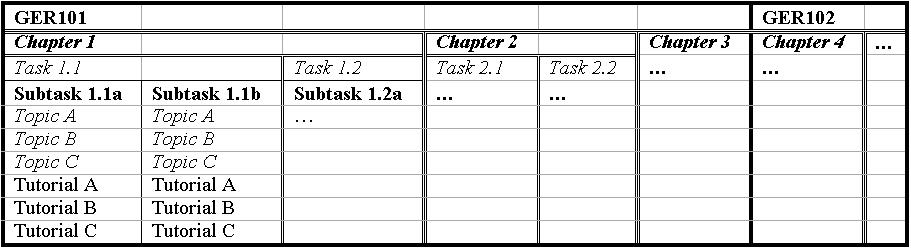

The instructor module is provided as a hypertext environment. The underlying structure which organizes the course materials is shown in Figure 2. Each course is based on three chapters in the Vorsprung book. A chapter should be covered in four weeks and contains two communicative learning tasks. Each should be submitted for individual grading and feedback after two weeks' work. A task submission is prepared by doing the two subtasks. Results for these are usually not submitted to the instructor, but they are posted to the group discussion board for peer feedback. In these subtasks students are encouraged to respond to submissions in German and to provide feedback in English.

Figure 2: Structure of the instructor module
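The structure described above (three chapters per course, two tasks per chapter, two subtasks per task) can be laid out as a week-by-week schedule. The following Python sketch is only an illustration: the class and function names are hypothetical, and it assumes that each subtask occupies exactly one teaching week and that a graded task falls due in the week of its second subtask.

```python
from dataclasses import dataclass

# Figures taken from the course description.
CHAPTERS_PER_COURSE = 3
TASKS_PER_CHAPTER = 2
SUBTASKS_PER_TASK = 2


@dataclass(frozen=True)
class Subtask:
    chapter: int  # chapter position within the course (1-3), not the textbook chapter number
    task: int     # task position within the chapter (1-2)
    subtask: int  # subtask position within the task (1-2)
    week: int     # teaching week (assumption: one subtask per week)


def build_schedule():
    """Return all subtasks of one course in teaching order."""
    schedule = []
    week = 0
    for chapter in range(1, CHAPTERS_PER_COURSE + 1):
        for task in range(1, TASKS_PER_CHAPTER + 1):
            for subtask in range(1, SUBTASKS_PER_TASK + 1):
                week += 1
                schedule.append(Subtask(chapter, task, subtask, week))
    return schedule


def task_due_weeks(schedule):
    """Map (chapter, task) to the week of its last subtask (assumed due week)."""
    due = {}
    for item in schedule:
        key = (item.chapter, item.task)
        due[key] = max(due.get(key, 0), item.week)
    return due


schedule = build_schedule()
print(len(schedule))                              # 12
print(sorted(task_due_weeks(schedule).values()))  # [2, 4, 6, 8, 10, 12]
```

Under these assumptions the twelve subtasks fill the twelve teaching weeks, and the six graded tasks fall due every second week.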

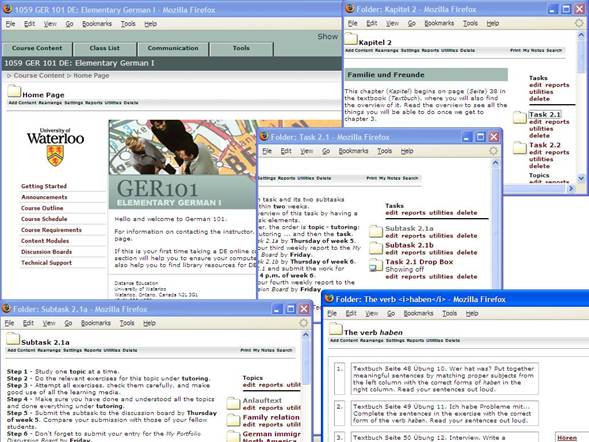

Each subtask is prepared by the students during one week by working through a set of topics – learning resources which introduce new material either on the CD or in the book or both. Students are then required to work through the corresponding tutorial which consists of a set of exercises using learning resources from all elements of the content module (Figure 3). They receive peer-feedback for their subtask submissions. Task submissions are done individually and are graded (six times 10%) and commented upon by the instructor. At the end of the course, students sit a two-hour written examination (30%) in one of the university’s examination centres.
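The weighting mentioned here (six graded tasks at 10% each and a 30% final examination), together with the participation mark of up to 10% described for the discussion boards, adds up to 100%. The short Python sketch below shows the arithmetic. The function name and the 0-100 score scale are assumptions made for illustration, as is the reading that these three components make up the whole grade.

```python
# Weights in percentage points, as stated in the text.
TASK_WEIGHT = 10           # each of the six graded tasks
NUMBER_OF_TASKS = 6
EXAM_WEIGHT = 30           # two-hour written examination
PARTICIPATION_WEIGHT = 10  # discussion-board participation (maximum)

TOTAL_WEIGHT = TASK_WEIGHT * NUMBER_OF_TASKS + EXAM_WEIGHT + PARTICIPATION_WEIGHT


def final_grade(task_scores, exam_score, participation_score):
    """Weighted final grade; all scores are assumed to be on a 0-100 scale."""
    if len(task_scores) != NUMBER_OF_TASKS:
        raise ValueError("expected exactly six task scores")
    weighted = TASK_WEIGHT * sum(task_scores)
    weighted += EXAM_WEIGHT * exam_score
    weighted += PARTICIPATION_WEIGHT * participation_score
    return weighted / TOTAL_WEIGHT


print(TOTAL_WEIGHT)                       # 100
print(final_grade([80] * 6, 70, 90))      # 78.0
```

The example scores are invented purely to show the calculation and are not taken from the study.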

Our online tutors provide feedback on graded task submissions and monitor the discussion boards of the course. Our tutors receive intensive training at the beginning of the academic term in the use of the LMS, feedback provision, grading practices, and on the kinds of students they can expect to have in their groups. Like Hampel and Stickler (2005), we believe adequate training for our online tutors to be important.

Figure 3: Components of GER 101

The student module. This module contains information about individual students which pertains to their learning of German. In order to be able to analyze students’ learning, we logged all written data students entered both in the discussion boards and the assignment submission boxes and kept the recordings of the spoken texts which they submitted through the Oral Tasks and Assignments tool (OTA). This portfolio of coursework is the basis for the provision of feedback and for grading.

3. The Case Study

Jamieson, Chapelle and Preiss argue that the “question for [CALL] research, then, is to what extent a particular type of CALL material can be argued to be appropriate for a given group of learners at a given point in time” (2005, p. 94). In our case, we wanted to establish how appropriate our task-based language learning design is for online distance education students. The test and questionnaire data are from one 12-week run-through of a GER102 group (see also Table 4). We had four groups who took the course in the same semester. Students selected their group during registration. They were assured that all groups would cover the same material and would be assessed in the same way. They were also informed that one group (group 3) would only meet once a week and that its learning of German would take place in an online environment. The same experienced instructor taught groups 1 and 2 in the classroom; group 3, the trial group, was taught online by another experienced instructor; and two graduate instructors co-taught the fourth group in the classroom. The situation for groups 1, 2, and 4 is representative of the situation in any given semester. Groups 1, 2 and 4 had four hours of class contact per week including one in the computer language laboratory which prioritised listening comprehension. The setup is that of a standard textbook-oriented, communicative language class; no task-based elements were introduced in these three groups. Group 3 met their instructor only for one hour a week. In this study, we timetabled one hour of face-to-face contact, firstly, as a ‘safety net’ – in case students would not be able to learn successfully in this ‘distance education mode’ with the materials we created; and secondly, to maintain regular contact with them for the purpose of this study. As it turned out, very little time during these weekly contact hours was used to learn German.
Often the 50 minutes per week were used for testing (quizzes, lab tasks, lab test – see below), organizational matters and data gathering for the learning impact study.

In spite of not having the students assigned to groups randomly, we had a quasi-experimental setup with one trial group of students who wanted to learn German online and three control groups two of which were taught by the same instructor. All groups used the same textbook package, covered the same amount of material during the same periods which were determined by the same pieces of assessment (e.g. quizzes, lab tests, midterm) administered at the same time.

3.1 Learner perceptions of online learning

A self-completion questionnaire on learner perception of different aspects of the learning design, course delivery and technology, in which all sixteen learners of the trial group participated, was administered shortly before the end of the semester. It had a total of 88 questions, in which learners were mostly asked to rate their answers using different Likert scales (e.g. priority, likeliness, agreement). Two questions asking about study hours were "numeric items" (Dörnyei, 2003). The questionnaire allowed learners complete anonymity, which generally encourages honesty (Sampson, 2003, p. 5). We were not interested in statistical results but in comparable data, and we focused on the part of the questionnaire in which learners answered questions about their specific experience of online learning as compared to on-campus or ‘paper-and-pencil’ distance education learning. Below, we provide tables with relevant questions and student responses from the questionnaires.

Student responses concerning the technology (Table 1), our first aspect, provide us with information about learners' ability to handle the technology needed for the course but they also tell us something about the kinds of learners enrolled in the online group.

Indicate how strongly you agree or disagree with each of the following statements (select only one response per question):

| No. | Statement | SA | A | D | SD | N/A |
|---|---|---|---|---|---|---|
| | Because of the way this course used Conferencing Tools (e.g. Message Board), | | | | | |
| 1. | I missed important information because the technology doesn’t work correctly. | 1 | 3 | 10 | 1 | 1 |
| 2. | I spent too much time learning to use | 1 | 3 | 9 | 3 | |
| 3. | I spent too much time trying to gain access to a computer. | | 1 | 10 | 5 | |
| 4. | I spent too much time trying to log on to the institution’s computer network/system. | | 1 | 10 | 5 | |
| 5. | I was less interested in activities that do not involve the use of computers. | | 1 | 10 | 5 | |
| 6. | I was at a disadvantage, because I do not possess adequate computer skills. | 1 | 2 | 5 | 8 | |
| 7. | I was at a disadvantage because I do not possess adequate typing skills. | | 2 | 6 | 8 | |
| | Because of the way this course used Electronic Communication: | | | | | |
| 8. | I wasted too much time sorting through my messages to find the few that are useful. | 2 | 3 | 5 | 3 | 3 |
| 9. | I usually had to wait a long time to use a computer. | | 1 | 5 | 10 | |
| 10. | I spent too much time trying to log on to the institution’s computer network/system. | | 1 | 7 | 8 | |
| 11. | I was at a disadvantage, because I do not possess adequate computer skills. | | 1 | 6 | 9 | |
| 12. | I was at a disadvantage because I do not possess adequate typing skills. | | 1 | 6 | 9 | |
| | Because of the way this course uses Word Processing: | | | | | |
| 13. | I was at a disadvantage, because I do not possess adequate computer skills. | | 1 | 7 | 8 | |
| 14. | I was at a disadvantage, because I do not possess adequate typing skills. | | 1 | 7 | 8 | |
| 15. | I wrote out my work on paper before typing it. | 8 | 3 | 4 | 1 | |

Table 1: Student responses about the technological aspect

(SA=Strongly agree, A=Agree, D=Disagree, SD=Strongly disagree, N/A=Not applicable)

Since only one of the 16 learners preferred computer activities over other kinds of activities (question 5), it is evident that this was an average group of language learners in terms of their interest in computer use. As discussed before, it is likely that learners chose the online section over other on-campus sections for reasons other than the fact that this course required more time on the computer. The responses document that the majority of students had no problems handling the technology. As responses to questions 11, 12, 13 and 14 indicate, only one learner, the same learner in each question, felt that he or she did not possess adequate computer and typing skills to handle electronic communication and word processing. The limits of the questionnaire are obvious here in that we do not know why this student had these problems. Slightly more learners indicated that they had inadequate computer and typing skills for the message boards (questions 6 and 7), though there could be a connection between these responses and answers to questions 2 and 8. Some students seemed to have difficulties with the set-up of the message boards. The course had a high number of message boards to engage students in interaction, which were linked individually from the description of each exercise in the online platform. Learners could only get to these boards from the window of the individual exercises or from the link "Course Tools" on the main page. The online platform did not allow easy cross-links. This may have made access to the boards difficult and required learners to search for message board entries rather than providing them with an easy way to get the information they needed. In fact, responses to question 1 seem evidence that some students felt they missed information kept on the message boards. 
Access to computers and the university network (questions 3, 4, 9 and 10) was not a problem, except for one student (the same student who answered questions 11 to 14 above negatively), a result which has since been confirmed in all run-throughs of the three courses: students did not have access problems. The majority of learners in our study reported that they wrote out their texts on paper before typing them (question 15), a practice often observed in language classes in computer laboratories.
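The counts discussed above can be checked by collapsing the four-point agreement scale of Table 1 into "agree" and "disagree". The Python sketch below does this for three of the questions; the function name is hypothetical, and empty cells in the table are read as zero responses, which is an assumption.

```python
# Response counts per question, in the order SA, A, D, SD, N/A (copied from Table 1).
# Empty table cells are entered as 0 (assumption).
TABLE_1 = {
    1: (1, 3, 10, 1, 1),   # missed important information
    5: (0, 1, 10, 5, 0),   # less interested in non-computer activities
    15: (8, 3, 4, 1, 0),   # wrote work out on paper before typing
}

GROUP_SIZE = 16  # learners in the trial group


def collapse(counts):
    """Merge SA with A and D with SD; keep N/A separate."""
    strongly_agree, agree, disagree, strongly_disagree, not_applicable = counts
    return {
        "agree": strongly_agree + agree,
        "disagree": disagree + strongly_disagree,
        "n/a": not_applicable,
    }


for question, counts in TABLE_1.items():
    # Every learner gave exactly one response per question.
    assert sum(counts) == GROUP_SIZE
    print(question, collapse(counts))
```

For question 15 this gives 11 of 16 learners agreeing, and for question 5 only one, which matches the figures cited in the discussion.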

The second aspect to be discussed is how our students managed their time (Table 2).

Indicate how strongly you agree or disagree with each of the following statements (select only one response per question):

| No. | Statement | SA | A | D | SD | N/A |
|---|---|---|---|---|---|---|
| 16. | I planned specific study times for this course and stuck to the schedule. | | 3 | 8 | 5 | |
| 17. | Because of the way this course used Conferencing Tools (e.g. Message Board), I was better able to juggle my course work with my work and/or home responsibilities. | 2 | 7 | 4 | 3 | |
| 18. | Because of the way this course used Electronic Communication, I was better able to juggle my course work with my work and/or home responsibilities. | 3 | 6 | 5 | 2 | |

Table 2: Student responses about time management

(SA=Strongly agree, A=Agree, D=Disagree, SD=Strongly disagree, N/A=Not applicable)

The responses show that time management was generally a major problem students encountered in their learning. The responses to question 16 indicate that the large majority of the learners, thirteen out of sixteen, either did not plan specific study times or did not keep to their study plan if they had one. In addition, the online format did not seem conducive to the learners' abilities to organize their work schedules, as responses to questions 17 and 18 show. This is somewhat unexpected, since learners certainly have more freedom for their own time management in a largely self-study course such as this online course, i.e. learners should find it easier to work around other responsibilities in order to do work for the course. It is possible that the self-study nature of the course led to these problems in time management. However, the responses may be an indication that the learners have problems in designing their work and study schedules in general. Evidence of this seems to come from their answers to the question of how many hours per week, on average, they had spent since the beginning of that term a) on the online course alone and b) on all of their courses combined. In this two-part question about study times, students indicated that their weekly time commitment for this online course ranged from 2 hours to 8 hours; all but three of the learners were below the time commitment of about 8 hours a week suggested in the syllabus. The responses to part b of the question revealed that this is not because their other courses took up too much time, except maybe for one student who spent 40 hours in total but only 2 hours on German. In fact, two out of the three learners who spent 8 hours on German also had a 40-hour study week. None of the other students had a full study week, which could mean either that they did not take a full course load of five courses or that they did not spend enough study time on their courses altogether.
We wonder whether the surprisingly low number for overall study time may be due to learners' misreading the question and only counting the hours which they spent studying outside of the classroom. While such misreading may have had an impact on the answers to part b of the question, it would not have had a major impact on their responses to part a, since the online course only had a one-hour seminar once a week.

The last aspect to be discussed is learner perception of instructional feedback (see Table 3). As one facet of student support, instructional feedback, especially timely feedback and helpful comments, has been found to be a key issue for the satisfaction of distance education learners (Sampson, 2003). "The response of tutors and 'turn-around time' for comments and grading is cited again and again as being a critical component of student support, with students who receive timely feedback on assignments responding more positively to the course than those who have to wait for feedback" (Delbecq & Scates, 1989 cited in Sampson, 2003, p. 105). In the discussion below, the focus is on assignment feedback, though learners received other kinds of feedback such as answers to questions on the question-answer board and on the exercise boards and oral feedback during the one class hour. Students submitted their assignments electronically and, after marking them up and writing comments, the instructor returned them electronically. In addition to individual feedback, the system also allowed electronic group feedback from the instructor on single assignments.

Table 3 : Student responses about instructional feedback

(SA=Strongly agree, A=Agree, D=Disagree, SD=Strongly disagree, N/A=Not applicable)

The overall result from the questionnaire part on instructional feedback is that most of the learners were pleased with the detail and the quality of the feedback (questions 19, 23, 24). They were less pleased with the turn-around time for feedback on their assignments (questions 20, 21, 22). Compared to on-campus courses or paper-and-pencil distance education courses, however, more learners judged the turn-around time in the online course to be the same or shorter (questions 25 and 28). Compared to these courses, a high number of students also believed that the feedback in the online course was more detailed (questions 26 and 27). In part, these answers might reflect the technical difficulties with the OTA (see above), problems which delayed the feedback on oral assignments significantly.

The questionnaire study has provided some insights into how technology, time management, and instructional feedback are perceived by students. These findings provide further evidence for claims made by others. Felix, for example, concludes from her quantitative analyses of student perception data on web-based language learning that the web is “a viable environment for language learning, especially as an add-on to face-to-face teaching” (Felix, 2001, pp. 53-54; see also Felix, 2004, p. 246). Her learners identified “meaningful feedback, logically organized content, easy navigation” (Felix, 2004, p. 246) as the most important factors in their positive perception of online materials.

The technology hardly posed any difficulties for the students. On the other hand, time management was a major problem for learners, not only for the online course but also for their regular courses. Students are usually expected to be able to manage their own time, and time management is not taught in regular classes. The study indicates, however, that learners may have to be told not only how many hours to spend but also how to make up their weekly schedule in order to allow for sufficient study time. Interestingly, the lack of time spent on task did not seem to have an impact on the learning results as reflected in the assessment.

3.2 Comparison of Student Attainment

Table 4 shows the data for the two major pieces of assessment – the midterm and the final examination – for the four groups. The one-hour midterm examination and the two-hour final examination are both written and contain a wide variety of exercises on vocabulary and grammatical structures as well as one reading comprehension and one text production task. These data are suitable for statistical comparison because the examination papers are identical for all groups and the instructors of the four groups always grade these tests in a joint session, during which each exercise is graded by the same instructor, providing for greater consistency across groups. Course content, progression over time and all other pieces of assessment – four quizzes on vocabulary and grammatical structures, four lab tasks on listening and reading comprehension, one lab test on listening comprehension and a speaking test consisting of a short prepared skit and a spontaneous question-answer session afterwards – were also identical for all groups.

| | Group 1 Midterm | Group 1 Final | Group 2 Midterm | Group 2 Final | Group 3 Midterm | Group 3 Final | Group 4 Midterm | Group 4 Final |
|---|---|---|---|---|---|---|---|---|
| Number of Students | 16 | 16 | 20 | 20 | 16 | 16 | 17 | 17 |
| Mean Grade | 71.19 | 71.19 | 72.35 | 73.60 | 73.94 | 74.06 | 69.85 | 66.29 |
| Standard Error | 2.94 | 2.72 | 3.93 | 3.71 | 4.07 | 3.27 | 4.18 | 3.83 |
| Median Grade | 70.50 | 70.00 | 72.00 | 75.50 | 78.00 | 74.50 | 70.50 | 62.00 |
| Standard Deviation | 11.77 | 10.87 | 17.57 | 16.61 | 16.29 | 13.09 | 17.23 | 15.77 |

Table 4 : Examination Data
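
The standard error values in Table 4 appear to be related to the standard deviation and the group size by SE = SD / sqrt(n). The following minimal sketch checks this relation against the figures transcribed from Table 4; the names `TABLE_4` and `standard_error` are ours and purely illustrative, not part of the original analysis.

```python
import math

# (group, exam): (number of students, standard deviation, reported standard error)
# All figures are transcribed from Table 4.
TABLE_4 = {
    ("Group 1", "Midterm"): (16, 11.77, 2.94),
    ("Group 1", "Final"): (16, 10.87, 2.72),
    ("Group 2", "Midterm"): (20, 17.57, 3.93),
    ("Group 2", "Final"): (20, 16.61, 3.71),
    ("Group 3", "Midterm"): (16, 16.29, 4.07),
    ("Group 3", "Final"): (16, 13.09, 3.27),
    ("Group 4", "Midterm"): (17, 17.23, 4.18),
    ("Group 4", "Final"): (17, 15.77, 3.83),
}


def standard_error(sd, n):
    """Standard error of the mean, computed from a sample standard deviation."""
    return sd / math.sqrt(n)


for (group, exam), (n, sd, reported) in TABLE_4.items():
    computed = standard_error(sd, n)
    print(f"{group} {exam}: computed {computed:.2f}, reported {reported:.2f}")
```

Small discrepancies in the second decimal place are to be expected, because the standard deviations in the table are themselves rounded.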

The first question we are interested in is whether the fact that students learnt online, working independently through the pre-task activities, the task and the post-task activities, made a difference in their performance in the two examinations. Between all four groups, there is no significant difference between the results in either examination (F < F crit : 0.19 and 1.04 < 2.74, α = .05). In statistical terms this means that we cannot reject the hypothesis that all four samples (groups) belong to the same population (students at the University of Waterloo); their different treatment (different instruction) had no significant effect. Burston argues when reviewing statistical efficacy studies in CALL that “lack of significant difference can be taken to indicate that computer-based instructional paradigms are just as good as traditional classroom teaching” (2003, p. 221).
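
The F values for the two examinations can be approximated from the summary statistics in Table 4 alone. The sketch below assumes a single-factor (one-way) ANOVA across the four groups, which the text does not name explicitly for this comparison; the function `one_way_anova_f` and the constant names are ours. The critical value is simply the one reported in the text, not recomputed.

```python
# Summary statistics from Table 4: (number of students, mean, standard deviation)
MIDTERM = [(16, 71.19, 11.77), (20, 72.35, 17.57), (16, 73.94, 16.29), (17, 69.85, 17.23)]
FINAL = [(16, 71.19, 10.87), (20, 73.60, 16.61), (16, 74.06, 13.09), (17, 66.29, 15.77)]

F_CRIT = 2.74  # critical value as reported in the text


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA from summary statistics."""
    total_n = sum(n for n, _, _ in groups)
    grand_mean = sum(n * mean for n, mean, _ in groups) / total_n
    # Between-group sum of squares: weighted squared distance of group means
    ss_between = sum(n * (mean - grand_mean) ** 2 for n, mean, _ in groups)
    # Within-group sum of squares, recovered from the sample standard deviations
    ss_within = sum((n - 1) * sd ** 2 for n, _, sd in groups)
    df_between = len(groups) - 1
    df_within = total_n - len(groups)
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within


for label, data in (("Midterm", MIDTERM), ("Final", FINAL)):
    f, df_b, df_w = one_way_anova_f(data)
    print(f"{label}: F({df_b}, {df_w}) = {f:.2f}, below F crit: {f < F_CRIT}")
```

Because the means and standard deviations in the table are rounded, the recomputed values can differ from the reported 0.19 and 1.04 in the second decimal place, but both stay well below the critical value.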

Concluding her meta-study investigating the effectiveness of information and communication technologies in second language learning, Felix states that “[s]mall positive gains are reported consistently; most of these, however, fall below significance level” (2005b, p. 284). She advises caution in the interpretation of the results of such quantitative studies since the positive effect measured might not be caused by the technology at all. We would argue that the success or failure of our courses is more likely linked to other factors such as instructional design and support. Hoven (2006, p. 235) quotes Jonassen (1992) and argues with him that technology does not directly mediate learning; instead, learning is mediated by thinking, and it is imperative to consider how learners are required to think when carrying out a particular task. We agree with these statements and would not want to make any claims about the impact of the delivery medium. What we conclude from our test data is that three factors in combination afforded these students attainment opportunities which were not significantly different: students who chose the online option over the in-class experience, the task-based language teaching design on the one hand, and a standard communicative, textbook-based language course in class on the other hand.

Even when comparing the overall grades for the course, there is no significant difference between the groups (F < F crit : 0.42 < 2.74, α = .05). This last comparison has some additional limitations: two components of the overall grades – participation and homework as well as oral tests – were graded by individual instructors in their respective groups, so the grading standards might have varied and certainly do not conform to test conditions. However, the overall grade is not biased toward proficiency in reading and writing as the two examinations used above are. Oral performance and listening comprehension are taken into consideration, but the difference between groups is still not significant.

Theoretically, this absence of a significant difference could have one of two reasons: either the instruction in the four groups did not have any effect at all, i.e. the students learnt too little for a significant difference to be measurable, or the instruction (our only variable in the comparison) – experienced instructor for two groups vs. largely independent online learning vs. two supervised, experienced graduate teaching assistants – did not have a significantly different effect. To establish whether or not the impact of another semester of German was high enough so that there could have been a significant difference between groups, we considered the GER 102 students from our study for whom we also had an overall grade for its prerequisite course, GER 101. We randomly selected 14 students from each group and thus based our calculations on four groups with 56 subjects with two observations each. Again we have no indication of a significant difference between the groups (F < F crit : 1.16 < 2.69, α = .05). However, there is a significant difference between the sets of grades for GER 101 and GER 102, indicating that a further semester of instruction has made a difference for the students in all four groups (F > F crit : 7.6 > 3.93, p < .05). This finding was confirmed by a t-test for paired samples which was based on all students (N=64) for whom we have both the overall grade for GER 101 and GER 102 (t > t crit : 5.72 > 2.0, p < .05). To make sure that students self-selecting for the groups did not unduly skew the results, we computed the same two-factor ANOVA with replication as before using the group setup of GER 101, the course most of the students had taken the semester before. Again there was no significant difference between these groups (F < F crit : 2.09 < 2.21, α = .05). This is an indication that in terms of attainment students were distributed reasonably evenly over the different groups in GER 102 as well as in GER 101 the semester(s) before.
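
A t-test for paired samples of the kind mentioned above works on the per-student differences between the two grades. Since the individual grades are not reproduced in this article, the sketch below uses HYPOTHETICAL grades for five invented students, purely to illustrate the calculation; the function name `paired_t` is ours, and the resulting value says nothing about the study's data.

```python
import math
from statistics import mean, stdev


def paired_t(first, second):
    """Return (t, degrees of freedom) for a paired-samples t-test."""
    if len(first) != len(second):
        raise ValueError("paired samples must have the same length")
    # One difference per student: second grade minus first grade
    diffs = [b - a for a, b in zip(first, second)]
    n = len(diffs)
    # Mean difference divided by the standard error of the differences
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


# HYPOTHETICAL overall grades, invented for illustration only
ger101 = [70, 65, 80, 75, 60]
ger102 = [74, 70, 82, 80, 63]

t, df = paired_t(ger101, ger102)
print(f"t({df}) = {t:.2f}")
```

The computed t is then compared with the critical value for the given degrees of freedom, just as the reported 5.72 is compared with 2.0 above.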

What can we conclude from the fact that there was no significant difference in student attainment between the trial group and any of the three control groups?

While no single study, nor any meta-analysis on its own can so far give a definitive answer on ICT effectiveness, a series of systematic syntheses of findings related to one particular variable such as learning style or writing quality might produce more valuable insights into the potential impact of technologies on learning processes and outcomes. (Felix, 2005a, p. 17)

Such syntheses, of course, require that individual studies are carried out first. Like Felix, we believe it is more productive to look at more complex, rather than isolated, simple variables because they retain at least some of the learning context. We wanted to know whether our cluster-like variable ‘instruction’ had an influence on possible student achievement in our courses. When we take into consideration the specific features of our trial group (group 3), we can conclude that our new online learning design for distance education gives students the same learning opportunity as students in our on-campus groups receive, if

· students want to study online (Upon registration our students had a genuine choice to enrol in one of the on-campus sections of the course or to enrol in the online pilot study; in that sense they were self-selecting.)

· they study the language online for a restricted period of time, in our case – one semester (We have no evidence either way to make any claims about the effects of prolonged or even exclusive online language instruction. Given the human nature of communication we tend to be careful in our evaluation of online learning designs.)

· most importantly, they follow a clearly structured, transparent task-based language teaching framework.

An evaluation always needs to consider the entire curriculum of which information and communication technologies form just one part. The value of our online environment can only be defined within the context set by university and department standards and, of course, our task-based and communicative approach to language learning and teaching.

4. Conclusion

Like other studies of the development of learning activities (e.g., Ros i Solé & Mardomingo, 2004), we found that task-based language learning provided a solid and flexible framework for the instructional design of our courses. With Doughty and Long (2003, p. 53) we stress that design principles rather than delivery media influence the viability of language learning. Our task-based approach facilitated the effectiveness of the online course delivery. The result of our quantitative analysis is likely based on the fact that our online learning environment and on-campus learning environment both provided conditions conducive to very similar positive learning outcomes. We advise that the conclusions drawn from this study need to take into account the context in which learning takes place, including the place of the online course within the entire curriculum. In combining the quantitative study and the questionnaire study, we were able to offer insights into the interpretation of the quantitative analysis. For example, the pilot group students overall had no technological difficulties and they found the instructional feedback extremely helpful, two possible reasons why there was no significant difference between this group and the on-campus students. In observing the running of our distance education courses since their inception we can confirm Felix’s notion that “learners who have chosen online courses [have mainly chosen them because they] prefer them to on-campus offerings” (2002, p. 3).

Bibliography

Börner, W. (1999). Fremdsprachliche Lernaufgaben. Zeitschrift für Fremdsprachenforschung, 10(2), 209-230.

Burston, J. (2003). Proving IT Works. CALICO Journal, 20(2), 219-266.

Bygate, M., Skehan, P., & Swain, M. (2001). Researching Pedagogic Tasks: Second Language Learning, Teaching and Testing. Harlow: Pearson Education.

Dörnyei, Z. (2003). Questionnaires in Second Language Research. Construction, Administration, and Processing. Mahwah/New Jersey and London: Lawrence Erlbaum Associates, Publishers.

Doughty, C. J., & Long, M. H. (2003). Optimal Psycholinguistic Environments for Distance Foreign Language Learning. Language Learning & Technology, 7(3), 50-80.

Drashkaba, T. (2005). Second Language Acquisition Online: Investigating Input and Output by Beginners. Unpublished Master's Thesis, University of Waterloo, Waterloo.

Eckerth, J. (2003). Fremdsprachenerwerb in aufgabenbasierten Interaktionen. Tübingen: Narr.

Egbert, J., & Yang, Y.-F. D. (2004). Mediating the Digital Divide in CALL Classrooms: Promoting Effective Language Tasks in Limited Technology Contexts. ReCALL, 16(2), 280-291.

Eigler, F. (2001). Designing a Third-Year German Course for a Content-Oriented, Task-Based Curriculum. Unterrichtspraxis, 34(2), 107-118.

Ellis, R. (2003). Task-based Language Learning and Teaching. Oxford: Oxford University Press.

Felix, U. (2001). The Web's Potential for Language Learning: The Student's Perspective. ReCALL, 13(1), 47-58.

Felix, U. (2002). The Web as a Vehicle for Constructivist Approaches in Language Teaching. ReCALL, 14(1), 2-15.

Felix, U. (2003). Pedagogy on the Line: Identifying and Closing the Missing Links. In U. Felix (Ed.), Language Learning Online: Towards Best Practice (pp. 220). Lisse: Swets & Zeitlinger.

Felix, U. (2004). A Multivariate Analysis of Secondary Students' Experience of Web-Based Language Acquisition. ReCALL, 16(1), 237-249.

Felix, U. (2005a). Analysing Recent CALL Effectiveness Research - Towards a Common Agenda. Computer Assisted Language Learning, 18(1), 1-32.

Felix, U. (2005b). What Do Meta-Analyses Tell Us about CALL Effectiveness? ReCALL, 17(2), 269-288.

Hampel, R. (2003). Theoretical Perspectives and New Practices in Audio-Graphic Conferencing for Language Learning. ReCALL, 15(1), 21-36.

Hampel, R., & Stickler, U. (2005). New Skills for New Classrooms: Training Tutors to Teach Languages Online. Computer Assisted Language Learning, 18(4), 311-326.

Hoven, D. (2006). Communicating and Interacting: An Exploration of the Changing Roles of Media in CALL/CMC. CALICO Journal, 23(2), 233-256.

Jamieson, J., Chapelle, C. A., & Preiss, S. (2005). CALL Evaluation by Developers, a Teacher, and Students. CALICO Journal, 23(1), 93-138.

Jiang, A. (2006). The Application of the Task-Based Approach in the UW German 101 Distance Education Course. Unpublished Master's Research Paper, University of Waterloo, Waterloo.

Knight, P. (2005). Learner Interaction Using E-Mail: The Effects of Task Modification. ReCALL, 17(1), 101-121.

Krashen, S. D. (1982). Principles and Practice in Second Language Acquisition. Oxford: Pergamon.

Lee, M. J. (2003). Error Analysis of Written Texts by Learners of German as a Foreign Language. Unpublished Master's Thesis, University of Waterloo, Waterloo.

Levy, M., & Kennedy, C. (2004). A Task-Cycling Pedagogy Using Stimulated Reflection and Audio-Conferencing in Foreign Language Learning. Language Learning & Technology, 8(2), 50-69.

Liebscher, G., & Schulze, M. (2002). German Online – A Course for Beginners. Forum Deutsch, 1/2002, 8-12.

Liebscher, G., & Schulze, M. (2004). Task-Based Learning and Community of Practice in an Elementary German Course. Unpublished manuscript.

Lovik, T. A., Guy, J. D., & Chavez, M. (2002). Vorsprung: An Introduction to the German Language and Culture for Communication (Updated Edition). Boston: Houghton Mifflin.

Lovik, T. A., Guy, J. D., & Chavez, M. (2007). Vorsprung: A Communicative Introduction to the German Language and Culture. Boston: Houghton Mifflin.

Ros i Solé, C., & Mardomingo, R. (2004). Trayectorias: A New Model for Online Task-Based Learning. ReCALL, 16(1), 145-157.

Rosell-Aguilar, F. (2005). Task Design for Audiographic Conferencing: Promoting Beginner Oral Interaction in Distance Language Learning. Computer Assisted Language Learning, 18(5), 417-442.

Sampson, N. (2003). Meeting the Needs of Distance Learners. Language Learning & Technology, 7(3), 103-118.

Skehan, P. (1996). A Framework for the Implementation of Task-Based Instruction. Applied Linguistics, 22, 27-57.

Skehan, P. (1998). A Cognitive Approach to Language Learning. Oxford: Oxford University Press.

Skehan, P. (2003). Focus on Form, Tasks, and Technology. Computer Assisted Language Learning, 16(5), 391-412.

Spodark, E. (2004). French in Cyberspace: An Online French Course for Undergraduates. CALICO Journal, 22(1), 83-101.

Stickler, U., & Hauck, M. (Eds.). (2006). What does it take to teach online? Towards a Pedagogy for Online Language Teaching and Learning. Special Issue of the CALICO Journal (Vol. 23.3). San Marcos: CALICO.

Strambi, A., & Bouvet, E. (2003). Flexibility and Interaction at a Distance: A Mixed-Mode Environment for Language Learning. Language Learning & Technology, 7(3), 81-102.

Swain, M. (1985). Communicative Competence: Some Roles of Comprehensible Input and Comprehensible Output in its Development. In S. M. Gass & C. G. Madden (Eds.), Input in Second Language Acquisition (pp. 235-253). Rowley: Newbury House.

Willis, J. (1996). A Framework for Task-Based Learning. Harlow, UK: Longman.

Biodata

Grit Liebscher (PhD Texas at Austin) is Associate Professor of German and an applied linguist with special interest in discourse analysis and sociolinguistics as well as second language acquisition. She has recently published on code-switching and L1 use in the L2 classroom. (http://germanicandslavic.uwaterloo.ca/~gliebsch)

Su Mei Zhen is Associate Professor of English in the Foreign Language College at the Central South University in China. She was a Visiting Research Scholar at University of Waterloo in 2003/4.

Mathias Schulze (PhD UMIST, Manchester) is Associate Professor of German. His research focus is the application of linguistic theory to computer-assisted language learning (CALL). He has published on language technology in CALL and the acquisition of grammar through CALL. (http://germanicandslavic.uwaterloo.ca/~mschulze)